We are the Universal Transfer Learning (UTL) Lab at Korea University, led by Prof. Donghyun Kim. Our research sits under the broad umbrella of transfer learning, with a particular emphasis on the transferability, generalization, and adaptability of robust artificial intelligence (AI) models across a wide range of AI domains and disciplines.

Our overarching objective is to develop highly effective transfer learning algorithms that work across disparate domains and modalities in many fields. These algorithms are tailored to a wide spectrum of real-world applications, with the aim of driving innovation across industries and sectors.

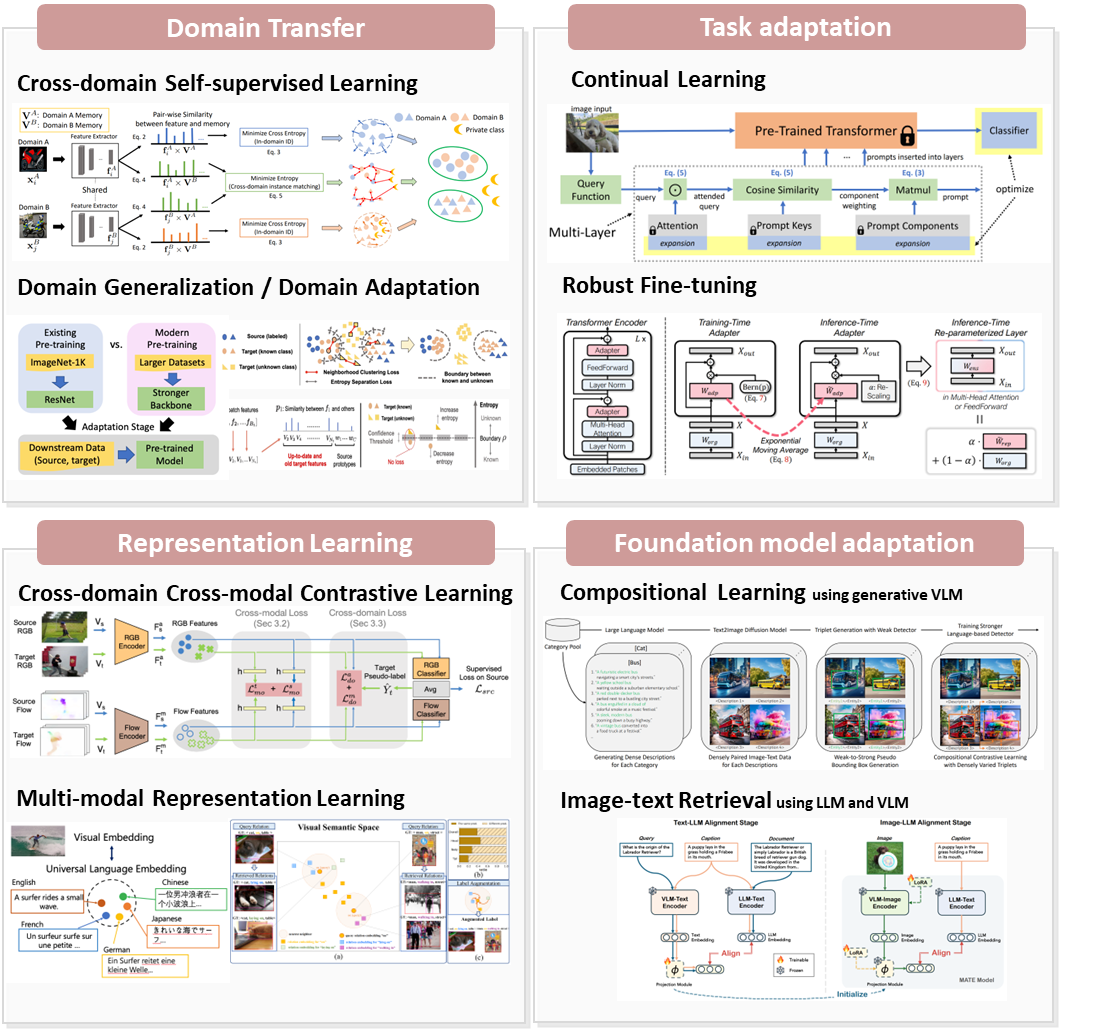

Our main research interests include, but are not limited to, the following:

We are looking for passionate new MS, MS/PhD, and PhD students, as well as postdocs, to join the team (more info)!

Jan. 2026

A paper has been accepted to Engineering Applications of Artificial Intelligence (EAAI). (JCR IF Top 3%)

Jan. 2026

A paper has been accepted to the Conference of the European Chapter of the Association for Computational Linguistics (EACL).

Dec. 2025

Donghyun Kim has been awarded the Outstanding Advisor Award in the WISET Graduate Women Engineers Research Team Program.

Dec. 2025

A paper has been accepted to the International Journal of Computer Vision (IJCV). (JCR IF Top 3%)

Dec. 2025

Sojung An, Sangwook Lee, and Hyunjee Song have been awarded the Minister of Science and ICT Award in the WISET Graduate Women Engineers Research Team Program.

Nov. 2025

Sojung An and Dong-Hee Kim have been awarded the AI SeoulTech Graduate School Scholarship.

Aug. 2025

Jungmyung Wi won 2nd place in the 2025 Samsung Collegiate Programming Challenge (ranked 2nd of 1,445). Congratulations!

Aug. 2025

A paper has been accepted to Scientific Reports. (JCR IF Top 25%)

Jul. 2025

A paper has been accepted to the International Journal of Computer Vision (IJCV). (JCR IF Top 3%)